Most colleges and universities have AI statements, but according to Inside Higher Ed’s Student Voice annual survey conducted last September, only 16 percent of students attribute their knowledge of when, how, and whether to use generative artificial intelligence in their coursework to university policies. About a third of students said they either learned about AI expectations from guidance stated in course syllabi (29%) or professors explicitly addressing the issue during class (31%), while others learned through institutional resources or solo exploration.

Surveys, like this Washington State University study, show that most professionals (83%) expect recent college graduates to be prepared to use AI effectively upon entering the workforce. This expectation has led many higher ed institutions to set policies conducive to AI literacy and skill development among students, making it harder for faculty to enforce unilateral AI bans.

The Issues with AI Ambiguity

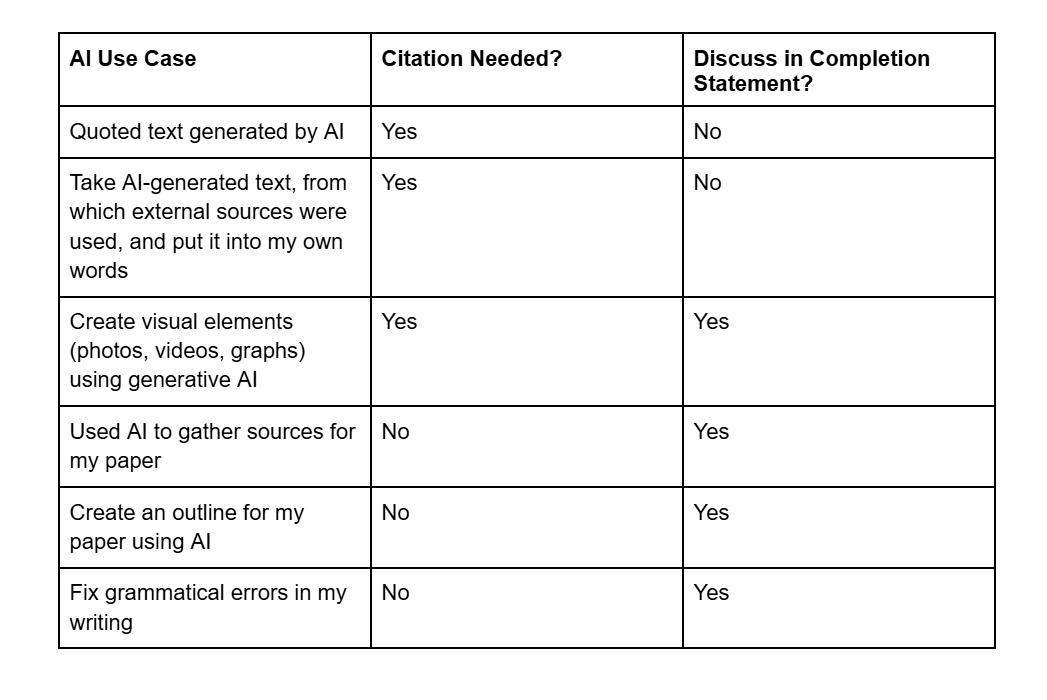

Widespread ambiguity among students about when and how to use AI creates several issues. One could assume that some students who lack clear expectations will end up using AI in ways they think are fair (see image above), while others will use platforms to achieve their desired grades or learning outcomes with the least resistance.

This variance in use cases can widen skill gaps between students and make it harder for faculty to assess their work. Students might use Claude to generate a script for a class presentation, ChatGPT to turn their raw notes from class into an outline for a final project, or Grammarly to add the finishing grammatical touches to their mid-term paper, all use cases that might be approved by one faculty member but disapproved by another. In classes with unclear guidelines on AI use, the students who come into the class knowing how to use it well and ethically will benefit most, while the students who don't will suffer through lost time, lower grades, or academic misconduct violations.

So, if faculty are tasked with setting course-level AI policies, and workforce and institutional norms are dissuading faculty from outright banning these platforms, how should faculty enforce ethical AI use? If you want my best crack at this answer, and ways to address the challenges explained above, keep reading.

A Comprehensive AI Policy

Any efforts to maintain academic integrity are futile without a clear, transparent, and well-reasoned AI policy. I’ve used my experience teaching this year (a new faculty orientation course this spring and a high school technology course this summer) to create such a policy, one that I think translates to a variety of course levels and subjects. I’ve pasted it below, and I’m also sharing it as a Google Doc for you to download, edit, and share with others.

AI Policy

Acceptable Use Cases

You’re encouraged to use generative AI platforms to support your learning and completion of assignments, projects, and assessments. Many employers expect recent graduates to ethically and effectively use AI in the workplace, and using these platforms to complete aspects of your work that aren’t explicitly stated in the assignment rubric allows you to devote more attention toward achieving the learning outcomes stated in the rubrics.

The following uses of AI are acceptable in this course:

Information gathering and verification: Conduct quick information searches, then verify accuracy by checking the original sources to ensure the AI's summary aligns with what those sources state

Source analysis and extraction: Summarize and extract information from text, video, and audio sources

Technical assistance: Receive step-by-step assistance on troubleshooting software used to complete assignments

Writing support: Generate outlines for papers based on your own thesis statements, arguments, and analysis; ask AI to pose rhetorical questions to aid your ideation process and give feedback on early drafts based on the assignment rubric

Minor grammatical changes: Improve spelling, punctuation, word choice, and succinctness of your writing

Personal work synthesis and study support: Generate summaries of your own notes, drafts, or completed work to deepen your understanding of a topic; use your own notes and work to create customized study materials and simulate scenarios where you’re applying the relevant skills

Initial issue exploration: Use AI to identify the primary arguments and perspectives surrounding a topic as a starting point for deeper investigation, recognizing that your responsibility is to conduct thorough research, evaluate evidence, and construct well-reasoned arguments

Data visualization: Design charts and graphs that simplify complex data, theories, and concepts

Citing AI

You’re only expected to cite AI when AI-generated text is used in your work. Similar to human-created work, any AI-generated text you put into your own words should be cited using APA 7th Edition. Here’s how to structure your citations:

Format: Company that made the tool. (Year text was generated). AI tool (Version of tool) [Large language model]. URL

In-text citation example: (OpenAI, 2023)

Bibliography: OpenAI. (2023). ChatGPT (Mar 14 version) [Large language model]. https://chat.openai.com/share/dccb3610-1db9-4eed-88b1-cdb06f67982a

You’ll be required to submit a completion statement for all assignments and projects except discussion posts and personal reflections. In this statement, you’ll be asked to describe whether and how you used AI, even if just to generate an initial outline or correct grammatical errors. This statement is intended to help you learn what is and isn’t working well in your assignment completion process and reflect on the tools and learning strategies you’ve deployed. It also helps me understand what resources and points of feedback would be helpful for your development.

Concerns and Plagiarism

AI results can be biased and inaccurate. Additionally, tools will use any content you share, including personal data. Using generative AI in ways that extend beyond these acceptable use cases increases the likelihood of biases and inaccuracies showing up in your work, and can create habits that compromise the wellbeing of the people you work with and for. Interpreting subtle communication cues, applying context-specific knowledge, empathizing with others, creative problem solving, and ethical decision-making grounded in personal values and lived experiences are all crucial workplace skills that AI can’t yet deploy. Using AI in the ways described in the Acceptable Use Cases section ensures you continue to develop in these areas.

The following characteristics are evident in AI-generated work in which human oversight is lacking or missing:

Overuse of adjectives and em dashes

Exclusive use of active voice (e.g., “The committee approved the policy” instead of “The policy was approved by the committee”)

Descriptions of concepts that lack plausible, highly detailed examples informed by lived experience

Fabricated sources or untrue facts

Vague statements (e.g., “Policy makers are influential in local communities” instead of “State senators pass legislation that directly impacts citizens of Baltimore City”)

Distorted or inconsistent formatting of text and visual elements

Inconsistent diction, structure, and flow, as if one or multiple paragraphs were written by another author

Morally ambiguous writing; writing where the author lacks clarity, conviction, intent, or emotional rhetoric

Writing quality that doesn’t match the writer’s years of experience in the field and the course level

Work containing one or more of these attributes will be subject to review using plagiarism detection software, and the student may be asked to resubmit the assignment and their assignment completion statement to meet the assignment criteria. If you have any questions about this policy, or recommendations on how to make this policy more conducive to your learning, please contact me during office hours, via email, or by appointment.

Platforms and Resources

You will find a list of common large language models (LLMs) on the “AI Resources” page in our Canvas course. For additional information on which platforms to use and how, visit the Using AI section of the Center for New Designs in Learning & Scholarship’s Artificial Intelligence (AI) Toolkit.

Sources

Anthropic. (n.d.). Claude (July 27 version) [Large language model]. https://claude.ai/share/4272db8a-3f34-47f4-8072-bbbc24bcea06

Council of Independent Colleges. (n.d.). Consolidated AI Policy Documents. Retrieved July 27, 2025, at https://docs.google.com/document/d/1Lj2GKEYvzKj6VQCLsp7xjhA-M2UhWmTky5REJL30rPo/edit?tab=t.0#heading=h.7cb9dkx6nkvw

Georgetown University Center for New Designs in Learning & Scholarship. (n.d.). Using AI - Artificial Intelligence (AI) Toolkit. Retrieved July 27, 2025, at https://cndls.georgetown.edu/resources/ai/using-ai/

Perplexity. (n.d.). Perplexity (July 27 version) [Large language model]. https://www.perplexity.ai/search/i-was-on-a-call-with-a-profess-OLCEceJ7RMen1rA3YeOfBQ?0=d

University of Maryland University Libraries. (n.d.). Research Guides: Artificial Intelligence (AI) and Information Literacy: How do I cite AI correctly? Retrieved July 27, 2025, at https://lib.guides.umd.edu/c.php?g=1340355&p=9896961

University of Washington. (2025, April 28). Sample syllabus statements regarding student use of artificial intelligence - Teaching@UW. Teaching@UW. Retrieved July 27, 2025, at https://teaching.washington.edu/course-design/ai/sample-ai-syllabus-statements/

This is the type of policy that, I’m hoping, can enforce itself. Students in my courses know that I’m not draconian about AI use, that the policy they’re being asked to follow reflects my consideration for the skills they’ll be expected to use in the workplace, that I openly use AI in my teaching (and model that transparency through the reference list at the end of my syllabus), and that they can come to me and their classmates for guidance.

Most importantly, they know an automatic failing grade or honor board meeting isn’t on the other side of their misuse; instead, they get a discussion with me that helps them understand why their unauthorized use won’t fly in the workplace. When I ask them to put a meeting on my calendar to discuss their work, they know it’s because their work appeared to include one of the telltale characteristics associated with AI-generated work that lacks human oversight. In some instances, the meeting gives students who didn’t misuse AI a chance to explain why their work may appear artificial, and gives both of us an opportunity to learn about ways to improve organic work in the age of AI.

I took this approach because it avoids the human black box of my own bias. When I ask faculty what distinguishes work that is entirely or mostly generated by AI from human-generated work, they say things like, ‘the writing is too perfect’ or ‘it lacks personal experiences.’ I hope that offering students something more concrete from the beginning allows them to identify their mistakes and see how their lack of oversight negatively impacts the people they’re aiming to help. This approach aims to eliminate the unspoken ‘expert’s intuition’ (“I know it when I see it”) with which faculty accuse students of AI misuse.

I offer a mild rationale for all of this: why I’m allowing them to use AI in my class, why I’ve authorized some use cases and not others, why human judgment is important in academic work, and why I ask them to submit a work completion statement with their major assignments. My policy isn’t defended with dissertation-level rigor; that’ll come with time, when we have more peer-reviewed studies on perceptions and effects of AI in the workplace, and when I have more time to dig into what’s already out there. But I’m hoping these justifications begin to resonate with my students as they focus on these topics in class and reflect on their learning throughout the semester.

How does this AI policy, and my approach to dealing with student breaches of it, align with how you plan to approach AI this coming school year? Which parts of this plan are incomplete or need more investigation, and which have or haven’t worked in your experience? Let me know in the comments below; I’d love to hear!
